How algorithms reproduce social and racial inequality


Chauncey DeVega, September 15, 2018

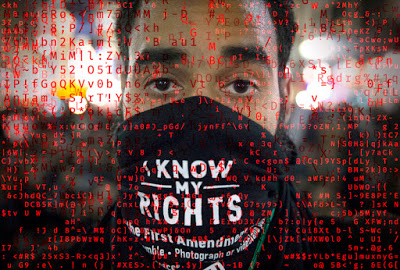

Though we can’t perceive them directly, we are surrounded by algorithms in our day-to-day lives: they govern the way we interact with our devices, use computers and ATMs, and move about the world.

Algorithms situated deep within computer programs and AIs help to manage traffic patterns on major roads and highways. Banks and other lenders also use algorithms to determine whether a person is deemed “worthy” of a loan or credit card. Algorithms also determine how information is shared on search engines such as Google and through social media platforms such as Facebook. The Pentagon, in conjunction with private industry, is developing algorithms that will empower unmanned drones to decide independently how, and in what circumstances, to use lethal force against human beings.

Technology is not neutral. How it is used and for what ends reflects the social norms and values of a given culture. As such, in the United States and around the world, algorithms and other types of artificial intelligence often reproduce social inequality and serve the interests of the powerful — instead of being a way of creating a more equal, free and just social democracy.

What are some specific ways that computer algorithms produce such outcomes? How do Google and other search engines serve as a means of circulating racial and gender stereotypes about nonwhite women and girls as well as other marginalized groups? In an era where “big data” and algorithms are omnipresent, how are those technologies and practices actually undermining democracy — and by doing so advancing the cause of authoritarianism and other right-wing movements such as Trumpism? Are social media and other forms of digital technology doing harm to society by making people more lonely and alienated from society?

In an effort to answer these questions, I recently spoke with Safiya Noble, a professor at the University of Southern California (USC) Annenberg School of Communication and the author of the new book “Algorithms of Oppression: How Search Engines Reinforce Racism.” Professor Noble is also a partner in Stratelligence, a firm that specializes in research on information and data science challenges. In addition, she is a co-founder of the Information Ethics & Equity Institute, which provides training for organizations committed to transforming their information management practices toward more just, ethical, and equitable outcomes.

This conversation has been edited for clarity and length.

What narrative would you craft about the role of Facebook, Twitter, and the Internet more broadly in this political moment, when democracy is under siege in the United States and in other countries as well?

The public was sold a bill of goods about the liberatory possibilities of the Internet back in the ’90s. Then the full-on datafication of our society began, which of course we’re in now, in which our every move is tracked and almost everything we sense and touch is collected and profiled. It is changing who we are and subverting our freedom. These processes have really undermined our civil liberties and civil rights. This is a moment in which the public has become numb to losing control, and has even lost the capacity to know it has lost control. That makes it the perfect moment for an autocratic, authoritarian regime to be in place. We are seeing this now in the United States with Trump and with authoritarian movements around the world.

For example, when the Cambridge Analytica story broke around Facebook, I spoke to people and they told me, “Well, what can I do? I have to have [Facebook].” There isn’t really an easy way to withdraw from our participation in these big data projects. They’re weaponized and used against the public. This is also where we see the worship of corporations among the younger generations. Their primary cultural identification is with companies and less with movements or with values and ideas.

I used to be in advertising and now I’m an academic. I have watched this loss of personal control and power happen over the years and decades. The way that people now talk about their rights is about making room and space for big companies to have a huge influence in their lives. We’re going to come to a moment where the bottom is going to fall out of this social system and we’re going to have to make some really tough decisions.

Loneliness is a precondition for fascism and other types of authoritarian regimes of power. Research has shown that loneliness and social alienation were certainly large factors in why Trump’s voters flocked to him. What is the role of social media technologies in creating — and cultivating — this type of social malaise?

Loneliness is perhaps more a byproduct of our engagement with these technologies than a precursor to it, or something that exists unto itself. The total consumer experience is that when you lose real social power and real economic opportunity, you need a way to resolve the alienation you are feeling. Social media and technology, these digital distractions, become a great way to deal with those feelings and to do other types of emotional labor. I teach my students, for example, about “fast fashion.” If you talk to fashion scholars, they’ll tell you, “Hey, listen, when you can’t afford to go to college because it costs $100,000 now to get a degree, man, it feels great to get two sweaters for 20 bucks at H&M.” This need for resolution, or instant gratification, is very much related to the loss of real opportunity. The loss of real wages.

This is the reality of what people, young people in particular, are experiencing. How could you not want to feel like there is someplace where you can get a high off of your endorphins? Of course, we know that the instant gratification people get from social media is important. It triggers the psychological and emotional stimulation that people are not getting in other parts of their lives. Ultimately, I feel like the loneliness question is really more about alienation in the form of a lack of housing, a lack of real wages, and a lack of access to affordable education. That is incredibly lonely and evokes real feelings of desperation. People simply turn to other places to feel gratified.

Where did the mistaken idea come from that the Internet would save us by being a great social leveler and a space of opportunity and freedom?

The early Internet users and evangelists, if you will, always talked about the Internet as a site of liberation: that it would be this kind of nationless, borderless, raceless, classless, genderless place. This was in the early, pre-commercial Internet days. Over time, of course, as the Internet became wholly commercialized, for many people the Internet became Facebook. The Internet is Google. The average user couldn’t really understand what the Internet is beyond those sites. People are relegated to Google and its products, which they rely upon as a kind of knowledge or sense-making tool.

People have come to relate to these platforms as a form of democracy, which is both hilarious and tragic. People engage with the Internet, which again is another corporate commercial space with lots of actors, as the site of democracy, and treat their participation in it as somehow democratic. Of course, we know that the Internet is a mirror of society. It is racialized, it is gendered, and it is a class-based experience.

All of the promises that those limits will be eradicated are false.

Unfortunately, many people feel like their participation online is a way of participating in real democracy. Too many people actually believe that because they can comment or otherwise participate online, it is a totally free and democratic space.

Algorithms are sequestering and creating markets: consumer markets of people who see certain kinds of messages or certain kinds of advertising. It’s not a free-for-all. It’s a highly curated space.

Democracy vis-à-vis technology and these platforms is not a replacement for real democracy on any level. If anything, these platforms are foreclosing the possibilities of people thinking critically, because what moves through these spaces is advertising-driven content. That’s actually very different from evidence-based research or other forms of knowledge that are critically important to democracy.

What are some examples of how algorithms and related technologies are reproducing social inequality?

These platforms stand in juxtaposition to public spheres of information, which are increasingly shrinking. Those public spheres include public schools, public libraries, public universities and public media. They are really important to creating a common education and a common knowledge base in our society. They are also very important sites of political struggle for fair representation, for historical accuracy, and for accuracy in reporting on the issues and other challenges we are facing in our society.

The public sphere is shrinking while the corporate controlled media is expanding.

What we are seeing is that the corporate actors who have the most capital are able to optimize their content to the top of the information pile. This is especially true with search engines. Individuals and groups with a lot of technical skill are also able to optimize their presence. White nationalists, for example, have an incredible amount of technical skill. You wouldn’t believe how effective they are at controlling discourses about race and race relations. They are skilled at vilifying social movements like Black Lives Matter and other kinds of people’s movements, because they are also well funded by right-wing organizations and billionaires.

If you have capital and technological skill, then you can mate that with a third force, which is Silicon Valley, where people are developing these platforms. Many of these engineers and other designers cannot even recognize these social forces at work, even while believing that they are developing platforms that are supposedly going to be great emancipators or liberators of knowledge. In sum, this is a real mess, which, I think, is what has led to the rise of a new type of fascism. Such ideas are being normalized online. People who are deeply disenfranchised, those in the crosshairs of racist and sexist policy in the United States and elsewhere, don’t have a lot of capital. They may or may not have a lot of technical prowess. And they certainly are not deeply embedded in Silicon Valley.

How do you respond to the counterargument that these are “just” technologies, which are “neutral” and cannot possibly be “racist” or “sexist”?

That’s the classic Oppenheimer response: we had to build the H-bomb because it was “science.” “We had to deal with what is in the best interest of science, and the application of the research is up to politicians.” But these are actually social issues. This is typically the way Silicon Valley engineers talk about the work they do: “We build tools and they’re neutral. If they get corrupted, it’s because of the way people engage with them and use them.”

First of all, we know that computer languages are called languages because they’re subjective. If programming languages are subjective, then they are highly interpretable. A search engine, for example, is a particular type of AI.

AI is about automated decision-making: if these conditions are present, then these kinds of actions should be taken. You cannot have logic and automated decision-making without a subjective process of thinking about what to prioritize and when.

Those are not flat issues of math. They are deeply subjective. In fact, Google itself has said that there are over 200 factors that go into developing its search technology and how it prioritizes information. Well, what are they? We can’t see them, but we can certainly look at the outcomes.

The code is trying to cater to a perceived audience that is in the majority. This is one of the reasons why “girls” has been synonymous with pornography in search engine results: the imagined audience looking for “girls” in a particular way is men, most likely looking for porn. That’s a subjective decision being made in the design of the algorithm and AI.

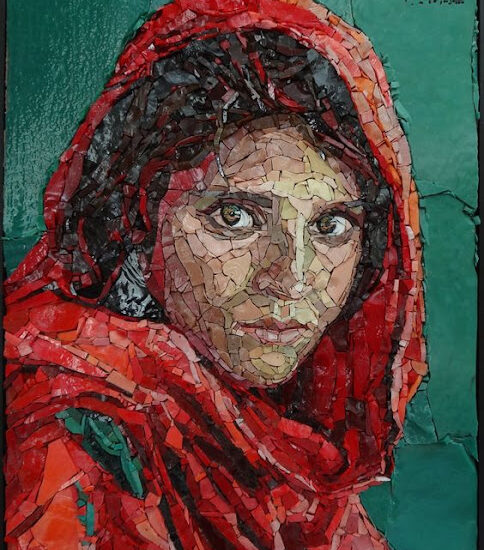

What do we know about how Google and other search engines reinforce racism and sexism as shown by the search term “black girls”?

For many years, up until about the fall of 2012, the first result would be “sugaryblackpussy.com,” followed by a host of porn sites or other deeply sexualized websites. Such sites made up 80 percent of the content on the first page, which of course is the most important page, because most people do not usually go past it. For many years, pornography was the primary representation of black girls.

This is also true for Latina and Asian girls. Google changed the algorithm on “black girls” because of pushback and controversy. There’s been a lot of talk about it for several years. With these search engine results pornography was the dominant representation for nonwhite women and girls online. This is highly consistent with the dominant stereotypical narratives which are used to legitimate the disenfranchising of nonwhite women and girls in American and global society. Pornography is not primarily about sexual liberation. Pornography is also a function and reflection of capitalism and violent control over women’s bodies and women’s sexuality.

When these search engine results are being generated, what types of decisions are being made by the algorithm?

Google’s web crawlers are looking to see what is popular. They are also looking to see what’s massively hyperlinked. They’re also trying to figure out what is fraudulent. There is a serious and genuine effort there, because Google, for example, can’t allow certain kinds of content that may be illegal in certain countries to come to the front page.

On another level, though, you have people who are optimizing content using tools such as Google AdWords. They will pay to have their content be more visible and highly ranked.

This is really important when we talk about democracy: people who are in the numerical minority are never going to be advantaged, especially if they do not have access to money and other types of resources. In terms of politics, the ability to game the system is really important, because certain actors are able to make sure their information is widely circulated regardless of whether it is true, dangerous, sexist, racist or the like. And because the Internet is so interconnected, the trends we see in one part of it, for example search engine results, then show up on YouTube, social media and elsewhere. Bad information flourishes on YouTube, for example, because people are trying to buy keywords and optimize the worst content to influence how people think.

There was a recent case where an AI “learned” to be racist and sexist because of its interactions with users on the Internet. Is this a classic example of “garbage in garbage out”?

It’s also not going to learn anti-racist and anti-sexist values, because those results may not be as optimized or as visible. If people are able to game certain things into popularity, or purchase certain kinds of ideas into popularity, then that will be the dominant logic that trains the AI. I’m actually repulsed by the idea of “artificial intelligence,” because intelligence in human beings is more than just textual analysis or image analysis or image processing. We also have feelings and emotions.

Neuroscientists will tell you that no amount of mapping of a technical network will ever be able to produce those dimensions of our intelligence. This idea that AI is superior to human intelligence is a farce because there are multiple dimensions of our humanity that cannot be replicated by machines. To your point, the machine is being trained on data that is biased. Data that is dangerous. Data that may not even be truthful or factual and then it will be used to replace human logic.

This is the part that becomes really challenging because one of the things I often say is, “If an algorithm discriminates against you, you can’t take it to court.” We don’t have a legal and social framework for intervening against these projects either. We don’t have an international body that we can appeal to when AI violates our human rights.

With financial deregulation and the rolling back of civil rights laws, there have been many documented examples of computer programs making financial decisions that disproportionately hurt (and in some cases specifically targeted) blacks, Latinos and other nonwhites. Billions of dollars in wealth was destroyed as a result. This was, and is, a clear example of biased individuals interacting with algorithms and other technologies that also discriminate against nonwhites.

Wall Street bet against the American people and effectively decimated the economy and the real estate market. Now, guess what? That data is going to be used to build and perfect the next generation of real estate software and machine learning. It’s going to learn that, “Oh, all of these African-Americans and Latinos who defaulted on their home loans? Those people are not reliable for real estate investment.”

This is a perfect example of how data gets constructed under discriminatory predatory practices. The rules and “logic” then become normalized as a baseline for the future. This is the kind of thing that is going to be incredibly difficult to intervene against. The courts and judges do not have the kind of data literacy that they would need to even adjudicate these kinds of cases.

There is a crisis of digital literacy in American society. There is a crisis of civic literacy as well. We also have engineers and other technologists who know how to make machines and write computer programs but who often lack any philosophical, moral, or ethical framework to guide their research. How would you address these very serious problems?

You cannot design technology for society if you don’t know anything about society. We’re going to have to engage people with deep knowledge about society. These are scholars who study postcolonialism, ethnic studies, women’s studies and sociology. Humanists, especially philosophers who study ethics and morality, will have to be in the driver’s seat to put the brakes on some of the choices that are being made. Technology is designed in an R&D lab, and then there is no public discussion about whether we as a society even want these things or not. Certainly, there’s no long-term study of the social or political consequences of these new technologies.